The New York Times recently ran an article on the ongoing debate over standardized testing, specifically the use of SAT and ACT scores in the college admissions process. The use of these tests has been debated for years, but during the pandemic, when in-person testing became impossible, many educational systems dropped the requirement and have simply not reinstated it.

The point of the article was that, despite many concerns that the exams themselves are biased in any number of ways, the use of standardized test scores at institutions that require them has actually increased the diversity of the student population (across all factors: race, sex, socioeconomic status, etc.) more than virtually any other admissions standard. In addition, the article points out that what many people see as bias in the tests themselves is likely misplaced: the tests accurately predict what they are intended to predict regardless of race and economics, namely, whether the student will do well in college.

Herein lies, perhaps, one of the most misunderstood aspects of standardized testing: these tests can only reliably predict what they are intended to predict, and nothing else. As a practicing academic who spends much of his day working on standardized testing programs in the technology industry, I am constantly confronted with these misconceptions.

What are Standardized Tests?

The first thing to understand is what standardized testing actually is. In short, standardized tests are built to predict some specific aspect of the individual taking the assessment. In the case of the ACT and SAT exams, they are designed to predict how well the individual will do in the university setting, and nothing more. By “predict”, I mean that they make a statistical inference, not an absolute determination: they are based on statistical science that describes a group, not any one individual. They do not specifically measure real-world capability. They do not measure overall intelligence. They only measure or predict what they were designed to measure or predict.

Two key aspects of this are “Validity” and “Reliability”. Validity is a measure of how well an assessment does what it says it does: does a high score on the exam actually predict what was intended? More succinctly, “are we measuring what we said we were?” Reliability is a measure of whether the same individual, taking the same assessment, consistently scores the same when nothing else has changed (preparation, training, etc.); i.e., does the test make the same prediction every time it is used, with no other factors affecting the results?
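To make these two definitions concrete, here is a minimal sketch. It assumes both are summarized as Pearson correlations, which is a common convention but not the only one: test-retest reliability as the correlation between two sittings of the same exam, and predictive validity as the correlation between exam scores and the later outcome. The scores and GPAs below are invented purely for illustration, and the function names are hypothetical.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length (at least 2)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant data")
    return cov / sqrt(var_x * var_y)


def retest_reliability(first_attempt, second_attempt):
    """Reliability: do the same people score about the same on a retake?"""
    return pearson(first_attempt, second_attempt)


def predictive_validity(scores, outcomes):
    """Validity: do scores track the outcome the test claims to predict?"""
    return pearson(scores, outcomes)


# Invented data for six hypothetical students (NOT real SAT/ACT results).
first_sitting = [1010, 1150, 1230, 1340, 1420, 1500]
second_sitting = [1030, 1140, 1250, 1330, 1440, 1490]
first_year_gpa = [2.4, 2.9, 3.0, 3.3, 3.4, 3.8]

print("reliability:", round(retest_reliability(first_sitting, second_sitting), 3))
print("validity:   ", round(predictive_validity(first_sitting, first_year_gpa), 3))
```

Both numbers describe the group of test takers as a whole. A high correlation says nothing definite about any single student, which is the sense in which these tests make a statistical inference and not an absolute determination.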

Despite what critics say, the SAT and ACT exams have both been proven to be valid predictors of what they measure, with high reliability. My score will accurately (within statistical deviations) predict my ability to be successful in college, and my score will be fairly consistent across multiple attempts unless I do something to change my innate ability. As the NYT article points out, this remains true: the higher you score on these exams, the better your academic results in post-secondary institutions. The fact that there is a significant discrepancy in scores based on race, socioeconomic situation, or any other factor is, frankly, irrelevant to the validity and reliability of the exam. Using the results of these exams in any context other than the one they were designed for is an invalid use.

The Legacy of Mistrust

These basic misunderstandings of standardized testing breed mistrust and suspicion of what the tests do and how they are used. This is nothing new, and it likely stems from the development and use of assessments in the past. The original intelligence quotient (IQ) test, developed around the turn of the 20th century, is subject to the same issues, including suggestions of racial and socioeconomic bias. In part this is because the IQ test is not actually a valid predictor of intelligence or the ability to perform successfully; like the SAT and ACT exams, however, research has shown it is a predictor of success in primary and secondary educational environments. Unfortunately, this was not fully understood when the assessment was born, and IQ has been misused in ways that have actually contributed to societal bias. This is the legacy that still follows standardized testing.

It is bad design, the misuse of standardized testing results, and the misinterpretation of those results that cause such spirited debate. The original IQ test was purported to determine innate intelligence, but was actually a predictor of primary/secondary educational success. Furthermore, research suggests that IQ is a poor predictor of virtually anything else, including an individual’s ability to succeed in life. This is a validity issue, meaning that the test did not measure what it purported to measure. Because of that validity issue, IQ testing was then misused to further propagate racial and socioeconomic inequity by suggesting that different races or different classes were just “less intelligent” than others, prompting stereotypes and prejudice that simply weren’t founded.

Given this legacy, it is easy to understand why many mistrust standardized tests and believe they are the problem, rather than a symptom of a larger problem.

The Real Issue is NOT Standardized Testing

The conversation around standardized testing has suggested that the reason for racial and socioeconomic disparity is bias within the testing. However, if we can accept that standardized tests (at least ones that are well designed to have validity and reliability) simply make a prediction, and that the SAT and ACT, in particular, make accurate predictions of a student’s ability to succeed in post-secondary education, then the question becomes: why is there a significant disparity in results based on race and socioeconomic background? Similarly, why did the original IQ testing accurately predict primary/secondary educational outcomes, but also suffer from the same disparity? The real question is: Why can’t students from diverse backgrounds equally succeed in our education system?

The answer is rather simple, and voluminous SAT and ACT data clearly indicate it: there is racial and socioeconomic disparity built into the educational systems. This is a clear issue of systemic bias; your chances of success within the system are greatly affected by race and socioeconomic background. Either what we are teaching, or how we are evaluating performance, is not equitable to all students. This is the issue we should be having conversations about, conducting research on, and taking action on. Continuing the debate, or simply eliminating standardized testing, is not going to affect the bigger issue. If anything, eliminating SAT and ACT testing will help hide the issue because we will no longer have such clear, documented evidence of the disparity. I don’t want to start any conspiracy theories, but maybe this is one reason so few educational systems are willing to reinstate ACT and SAT testing as part of their admissions requirements, especially when the research suggests they are better criteria for improving diversity than other existing means. They may be imperfect, but it is not the assessment’s fault; it is the system’s fault.

How to Improve?

First, I want to be clear: I don’t have any specific, research-based solutions. So, before I offer any suggestions based on my years of being in the educational system as a student, my years of raising children going through the educational system, and over a decade working with standardized test design and delivery, I want to emphasize that the best thing we can do to improve is simply to move the conversation away from the standardized tests and focus on the educational system itself. We need research to determine where the issue actually exists: is it what we teach, or how we measure performance? That MUST be the first step.

That being said, when it comes to “how we measure performance”, based on my background, education, and experience, I’m going to make a radical suggestion: more standardized testing. I know, I know. Our students are already inundated with standardized testing, but hear me out. While standardized tests are frequently used in our education system, they are rarely used to measure an individual student’s performance when it comes to grades (the ultimate indicator of success within the system); instead, they are used to assess the overall school’s performance. My suggestion is that these standardized tests may be a more equitable way to evaluate performance for the individual as well.

From an equity standpoint, while there are some proven correlations between individual test scores on the US National Assessment of Educational Progress (NAEP) assessment and those individuals’ ACT/SAT scores, the correlations were not perfect. In addition, correlations were weaker across racial/ethnic minorities and low-income students. NAEP scores have also shown positive correlation with post-secondary outcomes, although they were not the only factor. Finally, since the NAEP assessment began in 1990, the disparity in scores based on racial and socioeconomic differentiation has significantly diminished. This suggests the NAEP assessment may actually be better at determining the student’s capability, rather than just predicting their post-secondary success, while also having some ability to predict success. Yet NAEP assessments are not used in any way to actually grade the student’s performance. At the very least, NAEP results may be a viable way to augment current admissions and similarly reduce the racial and socioeconomic disparity. They may also be a better way to measure “success” in the primary/secondary educational system than current methods, which leads me to my next point.

The reality is that well-constructed, standardized assessments with proven validity and reliability are NOT how most of our students are evaluated today. Across the primary, secondary, post-secondary, and graduate levels, our students are routinely evaluated based on individual teacher-developed assessments and/or subjective performance criteria. Those teachers are inadequately trained in how to design, build, and validate psychometrically sound assessments (with validity and reliability); as such, the instruments used to gauge student performance routinely do not measure that performance. Without properly constructed assessments, our students are more likely to be measured on their English proficiency, cultural background, or simply whether they can decipher what the instructor was trying to say, rather than on the knowledge they have about the topic. Subjective evaluations (like those used for essay responses) are routinely shown to be biased and rarely give credence to novel or innovative thought; even professional evaluators trained to remove bias, like those used in college admissions, routinely make systematic errors in evaluation. Subjective assessments, in my personal and professional opinion, are fraught with inequity and bias that cannot be effectively eliminated. Furthermore, I can personally report that educational systems do not care, if the reaction to my numerous criticisms is any indication. Standardized testing would address this issue and, as we’ve seen with the NAEP, likely do a much better job of making performance assessments more equitable and fair across students.

On top of that, our students’ performance is also often judged on things like homework, attendance, and work products created in the process of learning, rather than on what they have learned or know. This misses the point, and likely exacerbates the disparity in “success” in our educational system. Single-parent and low-income homes, which also tend to be more racially segmented, can have dramatic effects on these types of assessments. First, you are outsourcing the learning to an environment you cannot control, where some students may gain experiential knowledge growth but others cannot; second, you compound that by further penalizing those who cannot with poor grades. While some students and parents (regardless of situation) may still engage in learning and experience outside the classroom, making it mandatory and grading on it likely contributes to the disparity, giving students in the best situations an unfair advantage. Finally, from my own research into the development of expertise, I know that not all students require as much experiential learning to master the knowledge. The development of expert knowledge is idiosyncratic: some require more, while some require less. As such, we should not be measuring performance on how the knowledge is obtained; we should focus on whether students have it or not.

I know the legacy of mistrust will make this a hard stance for people to support, but the use of standardized testing for assessing student performance would address a number of significant issues in current practices. It can be less biased, provide more consistent results across schools, and if used in place of subjective or other non-performance criteria, be a more accurate reflection of student capability.

Conclusion

Standardized testing, especially the behind-the-scenes work done to properly create the tests, is a mystery to most people. When you add the historical misuse and abuse of standardized testing, it is easy to see why many demonize the tests and question the results. The reality, though, is that well-constructed assessments, used properly, can not only help us uncover issues in society, but also help us address those issues. The data on SAT/ACT scores, both their ability to predict academic performance and the disparity in scores across racial and socioeconomic backgrounds, are a clear signal of the real problem: the racial and socioeconomic bias built into the education system. The education system’s definition of “success”, or how it is determined, is clearly biased. As such, we should not push to eliminate standardized testing, but look to see how we can improve our definition and measurement of success by doubling down on standardized testing in place of how we measure it today.

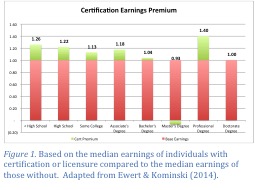

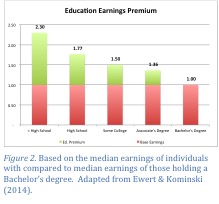

certification versus a Bachelor’s degree seems relevant. According to the U.S. Census Bureau (Ewert & Kominski, 2014), with the exception of a Master’s degree, there is an earnings premium for achieving certification or licensure regardless of education level. This premium predominantly benefits those with less post-secondary educational investment (see Figure 1). While it is true the earnings premium for having a Bachelor’s degree is much greater (see Figure 2), this is not a measure of return on investment. Return on investment is a measure of what you get for what you put in; i.e. ROI is the amount you can expect to get back for every dollar spent. This is where the ROI of certification is substantially better.

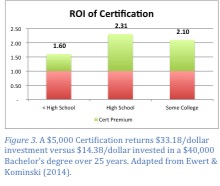

of $5,000 to achieve certification (probably a high estimate), the ROI of achieving certification for someone with only a high school education is 2.3 times that of achieving a Bachelor’s degree (see Figure 3). Furthermore, this is just a starting point as it doesn’t account for differences in earning while those achieving a Bachelor’s degree remain in school or the cost of interest on student loans for college tuition. The fact is, certification provides individuals an extremely efficient mechanism to improve their earnings potential, and achieve the post-secondary credentials that improve their ability to get and keep a job, even during tough economic times. The fact that IBCs add value to both those without other post-secondary education as well as those with, also demonstrates the greater flexibility of certifications.
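Read literally, the definition of ROI given above ("the amount you can expect to get back for every dollar spent") can be written as a simple ratio. This is a sketch of one plausible reading, not necessarily the exact calculation behind Figure 3. The time horizon over which the earnings premium is counted and the cost assumed for a Bachelor’s degree are not stated in this passage, so they are left symbolic here.

$$
\mathrm{ROI} = \frac{\text{earnings premium}}{\text{cost of the credential}},
\qquad
\frac{\mathrm{ROI}_{\text{certification}}}{\mathrm{ROI}_{\text{Bachelor's}}} \approx 2.3
$$

Under this reading, a credential with a smaller absolute earnings premium can still have the higher ROI if its cost is proportionally much lower, which is the comparison the passage draws.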