Research in the field of expertise and expert performance suggests that experts not only know more than non-experts, they also know it differently; experts employ different mental models than novices (Feltovich, Prietula, & Ericsson, 2006). While it remains unclear how antecedents directly affect the generation of mental models, the relationship between mental models and performance has been demonstrated across multiple domains of research (Chi, Glaser, & Rees, 1982; Feltovich et al., 2006). Unlike attempts to directly elicit the antecedents of performance, which may or may not contribute to future performance, the mental models of experts show stable and reliable differences in expert performance without requiring the artificial constructs of tacit knowledge measurements (Frank, Land, & Schack, 2013; Land, Frank, & Schack, 2014; Lex, Essig, Knoblauch, & Schack, 2015; Schack, 2004, 2012; Schack & Mechsner, 2006). The potential to define job-related expertise accurately, easily, and quantifiably is an organizational opportunity for both the accumulation and the management of talent.

What Are Mental Models

Based on information processing and cognitive science theories, mental models are the cognitive organization of knowledge in long-term memory (LTM) developed through learning and experience (Chase & Simon, 1973; Chi et al., 1982; Gogus, 2013; Insch, McIntyre, & Dawley, 2008; Schack, 2004). Mental models represent how individuals organize and implement knowledge, rather than explicitly determining what that knowledge encompasses. Novice practitioners start with mental models consisting of the most basic elements of knowledge required, and these models gradually gain complexity and refinement as the novice gains practical experience applying them in the real world (Chase & Simon, 1973; Chi et al., 1982; Gogus, 2013; Insch et al., 2008; Schack, 2004). Consequently, achieving expertise is not simply a matter of accumulating knowledge and skills, but a complex transformation of the way knowledge and skills are implemented (Feltovich et al., 2006). This distinction between what the individual knows and how the individual applies that knowledge has theoretical as well as practical importance for use in human assessment.

Mental models address important shortcomings that plagued prior attempts to assess human capital performance. In contrast to prior assessment methods, differences in mental models are proposed to reflect differences in the way individuals apply knowledge cognitively, rather than differences in the knowledge itself (Chi et al., 1982; Gogus, 2013; Insch et al., 2008). These findings are significant because they imply a measurable basis for the difference in performance between expert and novice, supporting mental models as the construct that captures the difference between the knowledge an individual has and how the individual applies that knowledge.

From a practical perspective, mental models clearly differentiate between experts and non-experts. Chase and Simon (1973) first theorized that the way experts chunk and sequence information mediated their superior performance. Chase and Simon found that grand master chess players’ superior performance resulted from recalling more complex information chunks. These authors demonstrated that both experts and novices could recall the same number of chunks, but the chunks of novices contained single chess pieces whereas the chunks of experts contained meaningful chess positions composed of numerous pieces. Chase and Simon further showed this superior performance to be context sensitive and domain specific, as grand masters were no better than novices at recalling random, non-game-specific piece constellations and showed no better performance in non-chess-related memory. This domain dependency indicates mental models of performance are not universal predictors but have job-related specificity, making them ideal for assessment.

The observation that experts and novices store and access domain-specific knowledge differently spawned research theorizing that quantitative, measurable differences in knowledge representation and organization might differentiate expert performance from non-expert performance (Ericsson, 2006). This research continues to substantiate increased experience and practice as the driver in the development of larger, more complex cognitive chunks (Feltovich et al., 2006). Feltovich et al. (2006) noted this effect as one of the best-established characteristics of expertise, demonstrated in numerous knowledge domains including chess, bridge, electronics, physics problem solving, and medical applications. Feltovich et al. suggested these changes allow experts to process more information faster and with less cognitive effort, thus contributing to greater performance.

Evolution of Mental Model Evaluation

Approaches to evaluating expert performance in academic and business domains already recognize the importance of mental model differences (Chi et al., 1982; Insch et al., 2008; Jafari, Akhavan, & Nourizadeh, 2013). The general acceptance of mental models as a critical discriminator of performance has driven a deeper focus on the nature and structure of these differences instead of the specific knowledge they represent (Gogus, 2013). This evolution of mental model evaluation, from a theoretical construct to a quantitative measure, mirrors the shift away from what individuals know and towards how individuals utilize that knowledge.

Studies of expertise and expert performance demonstrate dramatic differences in the way experts and novices organize knowledge in complex physics problem solving (Chi et al., 1982). Chi et al. (1982) utilized cluster analysis to show differences in the way experts and novices structure their knowledge; however, mental models were only one of several ways in which the authors analyzed expert and novice differences.
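
As a rough illustration of how cluster analysis can expose structural differences in knowledge organization, the following sketch applies a simple average-linkage clustering to two sets of pairwise dissimilarity ratings. Everything in it is an assumption made for illustration: the problem labels, the rating values, the two hypothetical raters, and the choice of average linkage. None of it is data or procedure taken from Chi et al. (1982).

```python
from itertools import combinations

def average_linkage(labels, dist, n_clusters):
    """Merge the two closest clusters (average linkage) until n_clusters remain."""
    clusters = [[i] for i in range(len(labels))]

    def gap(a, b):
        # Mean pairwise dissimilarity between the members of two clusters.
        return sum(dist[i][j] for i in a for j in b) / (len(a) * len(b))

    while len(clusters) > n_clusters:
        a, b = min(combinations(clusters, 2), key=lambda pair: gap(*pair))
        clusters.remove(a)
        clusters.remove(b)
        clusters.append(a + b)
    return sorted(sorted(labels[i] for i in c) for c in clusters)

# Hypothetical problem labels: <object involved>_<principle needed>.
labels = ["incline_energy", "spring_energy", "incline_force", "spring_force"]

# Made-up dissimilarity ratings (0 = identical, 5 = unrelated).
# Hypothetical rater A treats problems sharing a principle as similar.
rater_a = [[0, 1, 4, 5],
           [1, 0, 5, 4],
           [4, 5, 0, 1],
           [5, 4, 1, 0]]
# Hypothetical rater B treats problems sharing an object as similar.
rater_b = [[0, 4, 1, 5],
           [4, 0, 5, 1],
           [1, 5, 0, 4],
           [5, 1, 4, 0]]

groups_a = average_linkage(labels, rater_a, 2)
groups_b = average_linkage(labels, rater_b, 2)
print(groups_a)
print(groups_b)
```

Both raters see the same four problems, yet the resulting groupings differ, which is the kind of structural contrast a cluster analysis is meant to surface.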

Acknowledgment of these differences in mental representations rationalized the use of mental models in constructing more traditional tacit knowledge measures (Insch et al., 2008). Insch et al. (2008) approached tacit knowledge measures through evaluation of the actions individuals performed, acknowledging tacit knowledge was inherently how individuals use knowledge, not necessarily what knowledge they had. In taking this approach, the authors focused on the mental schemas that directed behavior instead of the antecedent values, beliefs and skills that contribute to performance. The focus on schemas as the driving factor in performance is notable as divergent from prior tacit knowledge measures; however, Insch et al. did not attempt measuring and comparing resultant mental models explicitly.

More recently, Jafari et al. (2013) looked to elicit and visualize the tacit knowledge of Iranian autoworkers concerning their knowledge of organizational strengths. The uniqueness of this study was the use of quantifiable measures of individual tacit knowledge for comparison between groups of individuals and purported experts, as well as the use of graphs to visualize the results for each group. Jafari et al. stipulated differences in mental models as an indication of differences between novice and expert workers but focused on the content rather than the structure of the mental model. The authors further operationalized the quantitative measures as differences in what the individuals knew, and not how they utilized or implemented the knowledge. This approach advanced the use of mental models in the identification of expert knowledge, yet failed to identify how these models differ regarding application or structure.

Other researchers focused more on the differences in comparative mental models than the specific knowledge represented within the models (Gogus, 2013). In evaluating the applicability and reliability of different methods of eliciting and comparing mental models, Gogus (2013) suggested the theoretical and methodological approach to the analysis of mental models is independent of the domain of knowledge. Gogus replicated and contrasted the use of two different methodologies for externalization and measurement of mental model differences. Of particular note, the author focused on contrasting the features of mental models instead of on the specific knowledge, experience, attitude, beliefs, or values of participants. These efforts further support differences in mental models as being more dependent on the tacit rather than explicit knowledge of the individual. Since mental models are inherently domain specific and often contain the same base explicit knowledge, structural differences in the mental models between experts and novices are more indicative of the differences in performance.

Research in the area of sports psychology has similarly focused on developing reliable means of comparing the mental models of individuals to differentiate performance and diagnose performance problems. Distinct differences in the mental models between experts and novices have been documented across multiple action-oriented skills including tennis (Schack & Mechsner, 2006), soccer (Lex et al., 2015), volleyball (Schack, 2012), and golf (Frank et al., 2013; Land et al., 2014). Schack and Mechsner (2006) demonstrated how differences in the mental models of the tennis serve related to the level of expertise. Lex et al. (2015) evaluated the differences in the mental models of team-specific tactics between players of varying levels of experience. Less experienced players averaging 3.2 years of experience (n = 20, SD = 4.2) generated mental models viewing team tactics broadly as either offensive or defensive. More experienced players averaging 17.3 years of experience (n = 18, SD = 3.3) further differentiated offensive and defensive tactics into smaller groups of related actions. For instance, more experienced players further segmented defensive tactics into actions for pressing the offense and actions for returning to standard defense.

Focus on the specific differences in the structure of mental models has not only proven effective in differentiating expert and novice performance but also provided insight into effective training regimens (Frank et al., 2013; Land et al., 2014; Weigelt, Ahlmeyer, Lex, & Schack, 2011). Frank et al. (2013) compared the models of novice performers to those of experts prior to and following a training intervention. The authors experimentally evaluated two randomly assigned groups of participants with no former experience in performing a golf putt. With the exception of an initial training video provided to all participants, none received any training or feedback. The experimental group participated in self-directed practice over a three-day period, while the control group did not practice at all. Frank et al. found the mental models of participants who practiced evolved, becoming more similar to expert mental models than those of participants in the control group. Since the formal knowledge of all participants remained the same, the outcome of this study further suggests the structure of individual mental models is dependent on the experience and tacit knowledge of the individual.
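
One way to make "more similar to expert mental models" concrete is to score how closely a participant's grouping of action concepts agrees with an expert reference grouping. The sketch below uses the Rand index, a standard agreement measure for two partitions of the same items. The choice of measure, the concept names, and all three groupings are assumptions made for illustration; the text above does not state which similarity measure Frank et al. (2013) used, and no values here come from that study.

```python
from itertools import combinations

def rand_index(part_a, part_b):
    """Share of item pairs that both partitions treat the same way
    (grouped together in both, or kept apart in both)."""
    def cluster_of(partition):
        return {item: k for k, group in enumerate(partition) for item in group}

    a, b = cluster_of(part_a), cluster_of(part_b)
    pairs = list(combinations(sorted(a), 2))
    agree = sum((a[x] == a[y]) == (b[x] == b[y]) for x, y in pairs)
    return agree / len(pairs)

# Hypothetical groupings of six made-up putting concepts.
expert_ref = [["grip", "stance"],
              ["backswing", "impact"],
              ["follow_through", "watch_ball"]]
before_practice = [["grip", "backswing", "watch_ball"],
                   ["stance", "impact", "follow_through"]]
after_practice = [["grip", "stance"],
                  ["backswing", "impact", "follow_through"],
                  ["watch_ball"]]

score_before = rand_index(expert_ref, before_practice)
score_after = rand_index(expert_ref, after_practice)
print(score_before, score_after)
```

In this made-up case the score against the expert reference rises after practice, mirroring the direction of change described above. A chance-corrected variant such as the adjusted Rand index would be a reasonable alternative when groupings differ greatly in the number of clusters.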

The Opportunity

The use of mental models to identify expertise shows great promise. Variations in mental model construction differentiate clearly between expert and novice performers across numerous domains of knowledge. Furthermore, methodologies highlighting the structural differences between the mental models of experts and novices show promise in the development and evaluation of training regimens. As a result, the development of human capital assessments based on the measurement of the structural differences between mental models represents a strategic opportunity for organizations to improve the quality of human capital selection as well as the development and assessment of existing human capital.

References

Chase, W. G., & Simon, H. A. (1973). The mind’s eye in chess. In Visual Information Processing (pp. 215–281). New York, NY: Academic Press, Inc. http://doi.org/10.1016/B978-0-12-170150-5.50011-1

Chi, M. T. H., Glaser, R., & Rees, E. (1982). Expertise in problem solving. In R. J. Sternberg (Ed.), Advances in the psychology of human intelligence (Vol. 1, pp. 7–75). Hillsdale: Lawrence Erlbaum Associates.

Ericsson, K. A. (2006). An introduction to Cambridge handbook of expertise and expert performance: Its development, organization, and content. In The Cambridge handbook of expertise and expert …. New York, NY: Cambridge University Press.

Feltovich, P. J., Prietula, M. J., & Ericsson, K. A. (2006). Studies of expertise from psychological perspectives. In The Cambridge handbook of expertise and expert …. New York, NY: Cambridge University Press.

Frank, C., Land, W., & Schack, T. (2013). Mental representation and learning: The influence of practice on the development of mental representation structure in complex action. Psychology of Sport and Exercise, 14(3), 353–361. http://doi.org/10.1016/j.psychsport.2012.12.001

Gogus, A. (2013). Evaluating mental models in mathematics: A comparison of methods. Educational Technology Research and Development, 61(2), 171–195. http://doi.org/10.1007/s11423-012-9281-2

Insch, G. S., McIntyre, N., & Dawley, D. (2008). Tacit Knowledge: A Refinement and Empirical Test of the Academic Tacit Knowledge Scale. The Journal of Psychology, 142(6), 561–579. http://doi.org/10.3200/jrlp.142.6.561-580

Jafari, M., Akhavan, P., & Nourizadeh, M. (2013). Classification of human resources based on measurement of tacit knowledge. The Journal of Management Development, 32(4), 376–403. http://doi.org/10.1108/02621711311326374

Land, W. M., Frank, C., & Schack, T. (2014). The influence of attentional focus on the development of skill representation in a complex action. Psychology of Sport and Exercise, 15(1), 30–38. http://doi.org/10.1016/j.psychsport.2013.09.006

Lex, H., Essig, K., Knoblauch, A., & Schack, T. (2015). Cognitive Representations and Cognitive Processing of Team-Specific Tactics in Soccer. PLoS ONE, 10(2), 1–19. http://doi.org/10.1371/journal.pone.0118219

Schack, T. (2004). Knowledge and performance in action. Journal of Knowledge Management, 8(4), 38–53. http://doi.org/10.1108/13673270410548478

Schack, T. (2012). Measuring mental representations. In G. Tenenbaum, R. Eklund, & A. Kamata (Eds.), Measurement in Sport and Exercise Psychology (pp. 203–214). Champaign, IL: Human Kinetics. Retrieved from http://www.uni-bielefeld.de/sport/arbeitsbereiche/ab_ii/publications/pub_pdf_archive/Schack (2012) Mental representation Handb

Schack, T., Essig, K., Frank, C., & Koester, D. (2014). Mental representation and motor imagery training. Frontiers in Human Neuroscience, 8(May), 328. http://doi.org/10.3389/fnhum.2014.00328

Schack, T., & Mechsner, F. (2006). Representation of motor skills in human long-term memory. Neuroscience Letters, 391(3), 77–81. http://doi.org/10.1016/j.neulet.2005.10.009

Weigelt, M., Ahlmeyer, T., Lex, H., & Schack, T. (2011). The cognitive representation of a throwing technique in judo experts – Technological ways for individual skill diagnostics in high-performance sports. Psychology of Sport and Exercise, 12(3), 231–235. http://doi.org/10.1016/j.psychsport.2010.11.001

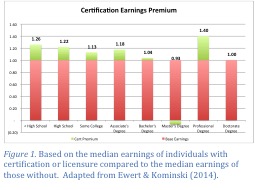

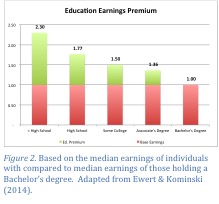

certification versus a Bachelor’s degree seems relevant. According to the U.S. Census Bureau (Ewert & Kominski, 2014), with the exception of a Master’s degree, there is an earnings premium for achieving certification or licensure regardless of education level. This premium predominantly benefits those with less post-secondary educational investment (see Figure 1). While it is true the earnings premium for having a Bachelor’s degree is much greater (see Figure 2), this is not a measure of return on investment. Return on investment is a measure of what you get for what you put in; i.e. ROI is the amount you can expect to get back for every dollar spent. This is where the ROI of certification is substantially better.

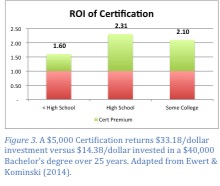

of $5,000 to achieve certification (probably a high estimate), the ROI of achieving certification for someone with only a high school education is 2.3 times that of achieving a Bachelor’s degree (see Figure 3). Furthermore, this is just a starting point as it doesn’t account for differences in earning while those achieving a Bachelor’s degree remain in school or the cost of interest on student loans for college tuition. The fact is, certification provides individuals an extremely efficient mechanism to improve their earnings potential, and achieve the post-secondary credentials that improve their ability to get and keep a job, even during tough economic times. The fact that IBCs add value to both those without other post-secondary education as well as those with, also demonstrates the greater flexibility of certifications.